What if social media algorithms were bipartisan?

"You don’t need to change the personal behaviors of lots of people... you just need to change some code, essentially"

Social media is driven by engagement, and few things are quite as engaging as us vs. them. Dunking on others is a great way to go viral.

Facebook and Twitter posts about political out-groups are shared about twice as often as posts about in-groups, one study found, and language about out-groups is a strong predictor of angry reactions online. But what if social media algorithms didn’t reward that kind of content?

Tweaking the algorithms to favor content that appeals to diverse groups is one solution researchers hope could make for a healthier social media experience. A paper by researchers Aviv Ovadya and Luke Thorburn calls for “bridging systems,” which would “increase mutual understanding and trust across divides, creating space for productive conflict, deliberation, or cooperation.”

“The hypothesis of bridging is that if you identify things for people to pay attention to which in some sense bridges divides between people, then that’s a good thing for social cohesion,” said Thorburn, a doctoral researcher at the UKRI Centre for Doctoral Training in Safe and Trusted AI at King’s College London.

Heightened political polarization in the West is hard to attribute to just one factor, he said, but changing the way algorithmic systems work is one manageable way to combat its rise.

“You don’t need to change the personal behaviors of lots of people and convince them to voluntarily change their behavior, you just need to change some code, essentially,” he said. “It’s not claiming it will solve the problem and make everything better completely, but it seems like there’s a chance that it will make a meaningful difference if it changes the incentives for what gets attention.”

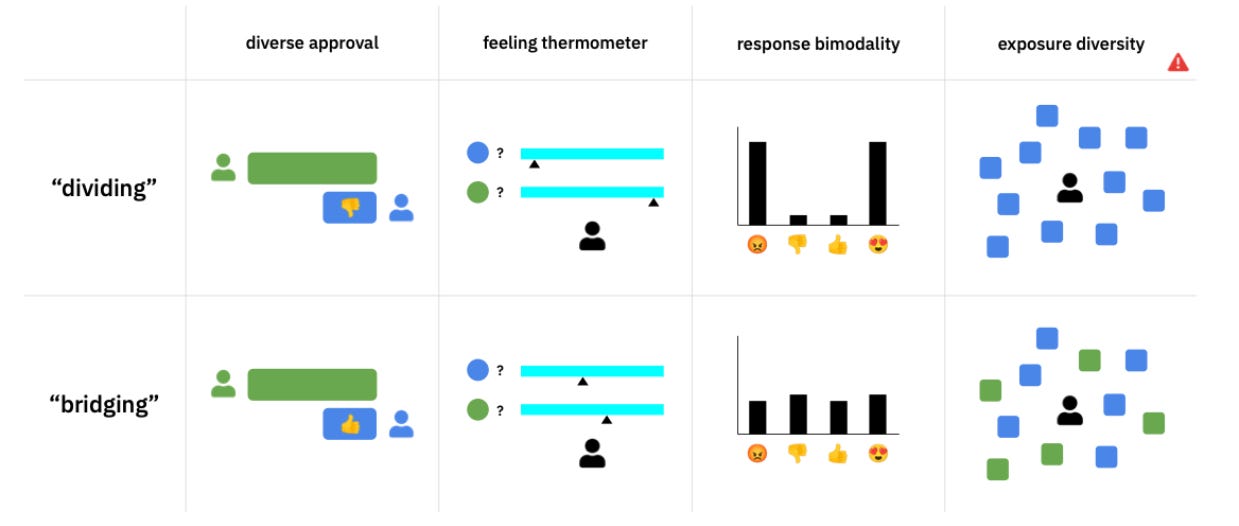

He and Ovadya offered several ways to move from engagement-based social media to bridging-based, including ranking content higher if users who would normally disagree with each other simultaneously support it or have a positive interaction, something known as “diverse approval.”
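The article doesn't say how “diverse approval” is calculated. One minimal way to sketch the idea, assuming every user has already been sorted into a group (the group labels, function names and toy data below are hypothetical), is to score each item by its *lowest* approval rate across groups, so a post only rises if every side likes it.

```python
from collections import defaultdict


def diverse_approval_score(approvals, user_group, groups):
    """Score an item by its LOWEST approval rate across groups.

    approvals:  {user_id: True if the user approved the item, False if they saw it but did not}
    user_group: {user_id: group label}; hypothetical, e.g. clusters inferred from past behavior
    groups:     every group label the item has to win over
    """
    seen = defaultdict(int)
    liked = defaultdict(int)
    for user, approved in approvals.items():
        g = user_group[user]
        seen[g] += 1
        liked[g] += int(approved)
    # A group that never reacted contributes 0: the item has not bridged to it.
    return min(liked[g] / seen[g] if seen[g] else 0.0 for g in groups)


def rank_by_diverse_approval(items, user_group, groups):
    """items: {item_id: approvals}. Returns item ids, best bridging score first."""
    return sorted(items,
                  key=lambda i: diverse_approval_score(items[i], user_group, groups),
                  reverse=True)


# Toy data: three "left" users and two "right" users.
user_group = {"a": "left", "b": "left", "c": "left", "d": "right", "e": "right"}
groups = {"left", "right"}
items = {
    # Three approvals, all from one side.
    "dunk":   {"a": True, "b": True, "c": True, "d": False, "e": False},
    # Only two approvals, but one from each side.
    "bridge": {"a": True, "b": False, "c": False, "d": True, "e": False},
}
ranking = rank_by_diverse_approval(items, user_group, groups)
```

A raw approval count would put “dunk” first (three approvals to two); this scoring puts “bridge” first because “dunk” wins over nobody on the right. Taking the minimum is a deliberately strict choice for the sketch; averaging the group rates, or requiring a set number of approvals from each group, would be softer alternatives.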

Another method, called a “feeling thermometer,” asks some users how they feel about political parties, uses that data to build a model of how other users would respond, and links it to content they’ve recently seen. “If you do that enough times to enough people you can detect patterns in what kinds of content tend to reduce affective polarization,” Thorburn said. A third method, “response bimodality,” rates content by how polarized users’ reactions to it are.
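For “response bimodality,” the exact formula isn't given here. A sketch of the idea, assuming reactions to each item are recorded on a numeric scale (say -2 to +2, our assumption), is to compute a standard statistic, the population form of Sarle's bimodality coefficient, and push down items whose reactions split into two opposing camps. The function names and toy data are hypothetical.

```python
from statistics import fmean


def bimodality_coefficient(ratings):
    """Population form of Sarle's bimodality coefficient: (skewness**2 + 1) / kurtosis.

    ratings: numeric reactions to one item, e.g. -2 (strongly negative) .. +2 (strongly positive).
    Gives 1/3 for a normal (bell-shaped) spread and reaches its maximum of 1 when
    reactions split into two values; above 5/9 is conventionally read as bimodal.
    """
    n = len(ratings)
    mean = fmean(ratings)
    m2 = sum((x - mean) ** 2 for x in ratings) / n
    if m2 == 0:
        return 0.0  # everyone reacted identically: no split at all
    m3 = sum((x - mean) ** 3 for x in ratings) / n
    m4 = sum((x - mean) ** 4 for x in ratings) / n
    skewness = m3 / m2 ** 1.5
    kurtosis = m4 / m2 ** 2  # plain (not excess) kurtosis
    return (skewness ** 2 + 1) / kurtosis


def rank_least_divisive(items):
    """items: {item_id: list of ratings}. Least bimodal (least polarizing) first."""
    return sorted(items, key=lambda i: bimodality_coefficient(items[i]))


items = {
    "split":     [-2, -2, -2, 2, 2, 2],  # two opposing camps
    "consensus": [0, 1, 1, 1, 2],        # reactions cluster around one view
}
scores = {i: bimodality_coefficient(r) for i, r in items.items()}
ranking = rank_least_divisive(items)
```

Here “split” scores 1.0 and “consensus” 0.4, so “consensus” ranks first. Skewness and kurtosis are noisy on a handful of ratings, so in practice a system would want many reactions per item before trusting the score.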

The authors point to a few examples of social networks that use bridging systems. YourView, a nonprofit Australian site, offered users information about policy proposals, to which they could add their own comments for or against. The site is no longer operational, but while it ran, commenters were given credibility ratings based on whether those they disagreed with viewed them as credible.

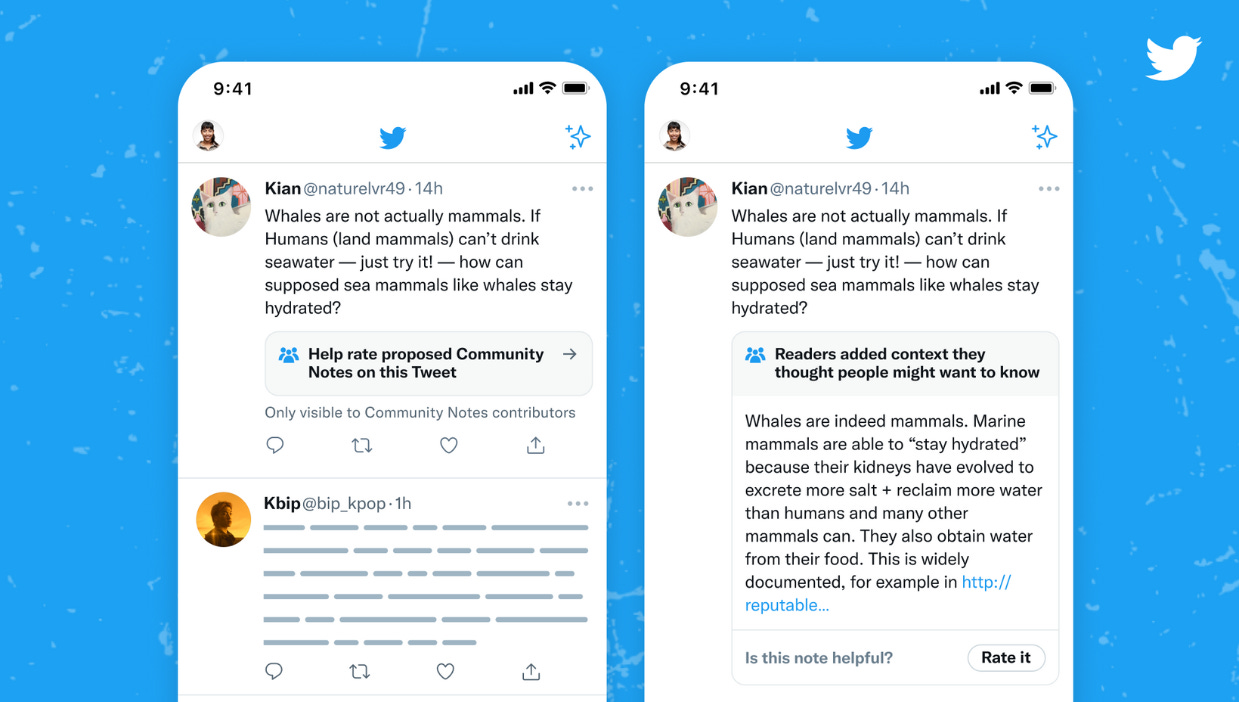

Believe it or not, they also cited Twitter for a feature rolled out last year, now called Community Notes. The program allows users to add notes to potentially misleading tweets, which other users can rate as “helpful” or “not helpful,” and the system takes into account whether a note was rated by users with different perspectives.
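Twitter has published its Community Notes ranking code, which, as we understand it, fits a matrix-factorization model: each rating is predicted from an overall average, a rater bias, a note “intercept,” and a rater-viewpoint factor times a note factor. Agreement explained by which side a rater is on gets absorbed by the factors, so a high intercept means helpfulness that holds across sides. The toy below sketches that idea under our own assumptions; the function name, learning rate, regularization strengths, raters and notes are all made up, and this is not Twitter's code.

```python
import random


def fit_note_intercepts(ratings, epochs=2000, lr=0.05,
                        reg_intercept=0.1, reg_factor=0.01, seed=0):
    """Toy fit of: rating ~ mu + user_bias + note_intercept + user_factor * note_factor.

    ratings: list of (user, note, value), value 1.0 = "helpful", 0.0 = "not helpful".
    Uses plain stochastic gradient descent with L2 regularization.
    Returns {note: intercept}.
    """
    rng = random.Random(seed)
    data = list(ratings)  # copy so shuffling does not mutate the caller's list
    users = sorted({u for u, _, _ in data})
    notes = sorted({n for _, n, _ in data})
    mu = 0.0
    user_bias = {u: 0.0 for u in users}
    note_icpt = {n: 0.0 for n in notes}
    # Small random factors break the symmetry so a one-dimensional "side" axis can emerge.
    user_f = {u: rng.uniform(-0.1, 0.1) for u in users}
    note_f = {n: rng.uniform(-0.1, 0.1) for n in notes}
    for _ in range(epochs):
        rng.shuffle(data)
        for u, n, r in data:
            err = r - (mu + user_bias[u] + note_icpt[n] + user_f[u] * note_f[n])
            mu += lr * err
            user_bias[u] += lr * (err - reg_intercept * user_bias[u])
            note_icpt[n] += lr * (err - reg_intercept * note_icpt[n])
            # Update both factors from their old values.
            user_f[u], note_f[n] = (
                user_f[u] + lr * (err * note_f[n] - reg_factor * user_f[u]),
                note_f[n] + lr * (err * user_f[u] - reg_factor * note_f[n]),
            )
    return note_icpt


# Hypothetical raters: more "left" than "right", which skews raw vote shares.
left = ["L1", "L2", "L3", "L4"]
right = ["R1", "R2"]
ratings = []
for u in left + right:
    ratings.append((u, "bridge", 1.0))  # everyone finds it helpful
    ratings.append((u, "bad", 0.0))     # nobody does
    ratings.append((u, "p_left", 1.0 if u in left else 0.0))
    ratings.append((u, "p_right", 1.0 if u in right else 0.0))

intercepts = fit_note_intercepts(ratings)
```

Counted naively, “p_left” looks far more helpful than “p_right” (4 of 6 raters versus 2 of 6) only because there are more “left” raters. The fitted intercepts of the two partisan notes end up much closer together, while “bridge” stays clearly on top. Regularizing intercepts more heavily than factors is a design choice in this sketch: it makes the model prefer to explain a split by viewpoint rather than credit the note itself.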

Some attempts at bridging can backfire, like “exposure diversity,” which shows users content from sources outside their social media echo chambers. Studies have shown this can sometimes cause users to double down on their previously existing beliefs.

Bridging faces other challenges, they write in the paper. “Many would argue that there should not even be an attempt to bridge some kinds of divides due to the abhorrence of particular groups or their lack of respect for human rights. This implies a key question: when is bridging appropriate and when is it not?” Some groups might also punish members who engage with people who reject the group’s dogma.

One of the greatest challenges, though, comes from the companies that write the algorithms, since emphasizing bridging over engagement could hurt their bottom line. Bridging is less likely to be implemented by companies unless there’s an economic benefit, regulation, or sustained external pressure, they write.

“There’s probably some sort of frontier to what extent you can incorporate bridging without reducing engagement so much that the platform becomes unviable,” Thorburn said.

Social media’s rise has come as societies face mounting problems that are easier faced together than apart, from a pandemic to climate change. As Thorburn and Ovadya write in their paper, “Our social spaces should not default to divisive.”

Have you seen this?

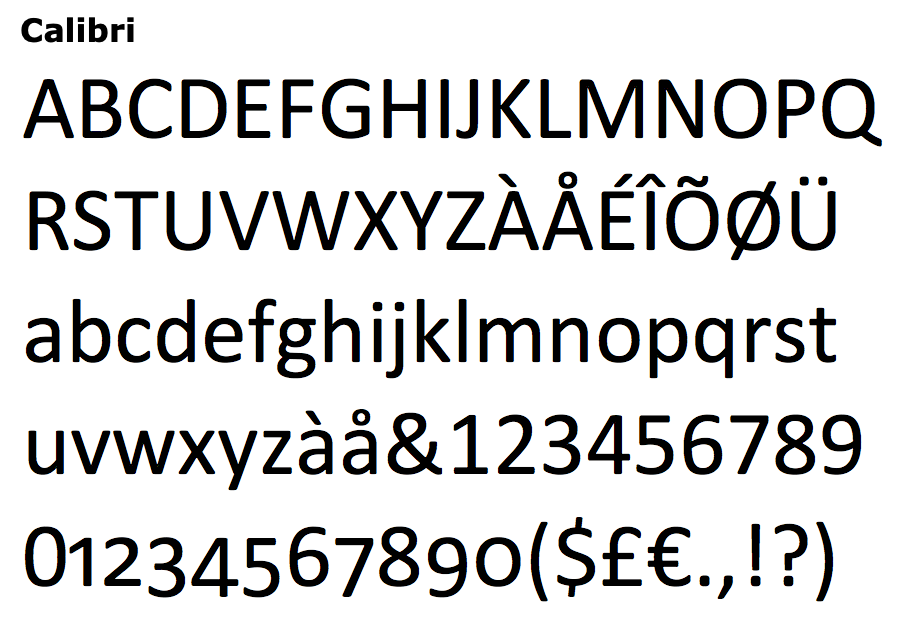

The State Department is going sans serif. Secretary of State Antony Blinken has directed that all official communications and memos switch from Times New Roman to the sans serif Calibri, per a cable obtained by the Washington Post. Calibri was “recommended as an accessibility best practice by the Secretary’s Office of Diversity and Inclusion,” the cable read in part, and one Foreign Service worker said they were “anticipating an internal revolt.” What say you? [The Washington Post]

This Black type designer wants to help you discover the world beyond Helvetica. Vocal Type founder Tré Seals creates his typefaces based on significant moments in Black history and culture. [Washingtonian]

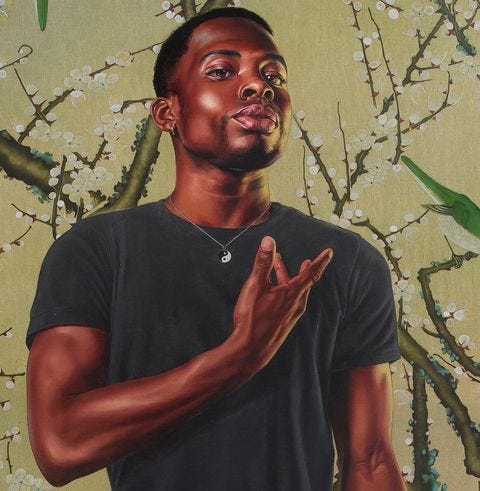

Kehinde Wiley’s new exhibition is inspired by traditional Japanese nature paintings. Colorful Realm features nine new paintings and opens at Roberts Projects in Los Angeles this Saturday, Jan. 21, running through April 8. “So much of my work is about appearance, showing up and being visible and this dance between exploring the vastness of space within the minimality of this technique I find to be an interesting juxtaposition,” the artist said in a statement.

How Michelle Obama’s flexing her soft power. Liberated from the constraints of her husband’s presidency, former first lady Michelle Obama is building a new kind of post-FLOTUS platform, and she’s more popular than before. [YELLO]